Image & Video Forensics

Multimedia data is an integral component of our daily communication. Smartphones and other low-cost consumer devices allow users to view, capture, and share images, video, and audio in real time and with minimal effort. Captured multimedia content is used for communication, entertainment, and record-keeping.

From a security perspective, these widespread use cases raise questions about the authenticity and origin of controversial content. Our group provides algorithmic tools to answer these common questions about multimedia content:

- has an image or video been edited, and if so, how, where, and to what extent?

- is it possible to link an image or video to a particular user?

- what information can be forensically retrieved from degraded multimedia data or physical documents?

- how can multimedia content be actively armored to support post-hoc analysis of manipulations or attribution?

A quick collection of our past and present lines of research:

- Detection of DeepFakes and Face2Face-manipulated videos

- Estimation of the direction of incident light

- Video analysis with noise residuals

- Estimation of the color of the illuminant

- Format-based manipulation detection

- Copy-move forgery detection

Recent publications:

EUSIPCO 2022: Compliance Challenges in Forensic Image Analysis Under the Artificial Intelligence Act

In many applications of forensic image analysis, state-of-the-art results are nowadays achieved with AI methods. However, concerns about their reliability and opaqueness raise the question of whether such methods can be used in criminal investigations from a legal perspective. In April 2021, the European Commission proposed the Artificial Intelligence Act, a regulatory framework for the trustworthy use of AI. Under the draft AI Act, high-risk AI systems for use in law enforcement are permitted but subject to compliance with mandatory requirements. In this paper, we summarize the mandatory requirements for high-risk AI systems and discuss these requirements in light of two forensic applications, license plate recognition and deepfake detection. The goal of this work is to raise awareness of the upcoming legal requirements and to point out avenues for future research.

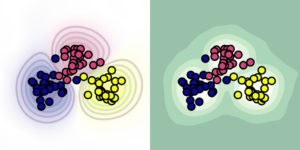

IEEE SPL 2021: Reliable Camera Model Identification Using Sparse Gaussian Processes

Identifying the model of a camera that has captured an image can be an important task in criminal investigations. Many methods assume that the image under analysis originates from a given set of known camera models. In practice, however, a photo can come from an unknown camera model, or its appearance could have been altered by unknown post-processing. In such a case, forensic detectors are prone to fail silently.

One way to mitigate silent failures is to use a rejection mechanism for unknown examples. In this work, we propose to use Gaussian processes (GPs), which intrinsically provide such a rejection mechanism. This makes GPs a potentially powerful tool in multimedia forensics, where forensic analysts regularly work on images of unknown origin.
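To illustrate how a GP can reject inputs, here is a minimal sketch, assuming exact GP regression on ±1 labels with a squared-exponential kernel rather than the sparse GP classifier of the paper. The predictive variance rises back toward the prior variance far away from the training data, so thresholding it gives a simple reject option. The function names and the threshold `max_var` are hypothetical.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel matrix between the rows of a (n, d) and b (m, d)."""
    sq_dist = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
    return variance * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gp_predict(x_train, y_train, x_test, noise=1e-2, length_scale=1.0, variance=1.0):
    """Exact GP regression: posterior mean and variance at each test point."""
    k_tt = rbf_kernel(x_train, x_train, length_scale, variance) + noise * np.eye(len(x_train))
    k_ts = rbf_kernel(x_train, x_test, length_scale, variance)
    chol = np.linalg.cholesky(k_tt)                      # k_tt = chol @ chol.T
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_ts.T @ alpha
    v = np.linalg.solve(chol, k_ts)
    var = variance - np.sum(v**2, axis=0)                # prior variance minus explained part
    return mean, var


def classify_with_rejection(x_train, y_train, x_test, max_var=0.5):
    """Labels in {-1, +1}; returns the predicted sign, or 0 ('unknown') if too uncertain."""
    mean, var = gp_predict(x_train, y_train, x_test)
    labels = np.sign(mean).astype(int)
    labels[var > max_var] = 0
    return labels
```

With two training clusters around -2 and +2, test points near either cluster receive a label, while a point at 10.0 keeps nearly the full prior variance and is rejected instead of being forced into one of the known classes.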

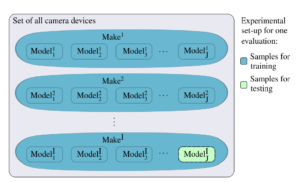

MMForWild 2020: The Forchheim Image Database for Camera Identification in the Wild

For a fair ranking of image provenance algorithms, it is important to rigorously assess their performance on practically relevant test cases. We argue that there is a gap in existing databases: none of the existing forensic benchmark datasets simultaneously a) allows cleanly separating scene content and forensic traces, and b) supports realistic post-processing like social media recompression. We propose the Forchheim Image Database (FODB) to close this gap. It consists of more than 23,000 images of 143 scenes by 27 smartphone cameras, and allows cleanly separating content from forensic artifacts. Each image is provided in original quality and as five copies from social networks. We demonstrate the usefulness of FODB in an evaluation of methods for camera identification, and report three findings: First, EfficientNet remarkably outperforms dedicated forensic CNNs both on clean and compressed images. Second, classifiers obtain a performance boost even on unknown post-processing after augmentation by artificial degradations. Third, FODB’s clean separation of scene content and forensic traces imposes important, rigorous boundary conditions for algorithm benchmarking.

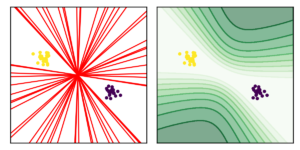

IEEE WIFS 2020: Reliable JPEG Forensics via Model Uncertainty

Many methods in image forensics are sensitive to varying amounts of JPEG compression. To mitigate this issue, it is either possible to a) build detectors that better generalize to unknown JPEG settings, or to b) train multiple detectors, where each is specialized to a narrow range of JPEG qualities. While the first approach is currently an open challenge, the second approach may silently fail, even for only slight mismatches in training and testing distributions. To alleviate this challenge, we propose a forensic detector that is able to express uncertainty in its predictions. This allows detecting test samples for which the training distribution is not representative. The applicability of the proposed method is evaluated on the task of detecting JPEG double compression.
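One generic way to obtain such uncertainty is to aggregate several stochastic predictions per sample, for example from Monte Carlo dropout or an ensemble, and to abstain when they disagree. The sketch below assumes that a detector already outputs probabilities of double compression. The function name and the `max_std` threshold are hypothetical, and this is not necessarily how the paper models uncertainty.

```python
import numpy as np


def decide_with_uncertainty(prob_samples, max_std=0.15):
    """
    prob_samples: array of shape (T, N) holding T stochastic estimates of
    P(double compressed) for each of N test samples (hypothetical input, e.g.
    from Monte Carlo dropout or an ensemble of detectors).
    Returns decisions (1 = double, 0 = single, -1 = abstain) and the spread.
    """
    samples = np.asarray(prob_samples, dtype=np.float64)
    mean = samples.mean(axis=0)          # predictive probability
    std = samples.std(axis=0)            # disagreement between the estimates
    decisions = (mean >= 0.5).astype(int)
    decisions[std > max_std] = -1        # training data likely not representative here
    return decisions, std
```

Abstaining on high spread turns a silent failure into an explicit "no decision", which an analyst can then handle with a different detector or manual inspection.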

DFRWS EU 2020: Towards Open-Set Forensic Source Grouping on JPEG Header Information

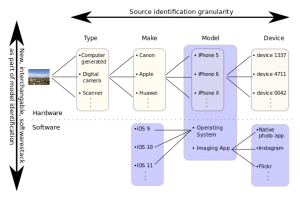

Information on the model and make of the device that was used to produce an image is a helpful cue in many digital investigations. For instance, such information can help refute the hypothesis that an illegal photograph was produced using the personal device of a suspect. Grouping images by provenance can be done in different ways. Based on encouraging insights from previous works, we consider the grouping of JPEG images by their file headers. Due to the ongoing development of new camera models and the practical difficulty of keeping a model database up to date, we propose to treat image provenance as an open-set classification problem, with the goal of predicting the make of a previously unseen camera model. We show that such a prediction can perform remarkably well, with median accuracies above 90% for each make. In additional experiments with postprocessed images, we achieve median accuracies of about 55% and 75% for desktop software and smartphone apps, respectively.

Paper: Towards Open-Set Forensic Source Grouping on JPEG Header Information

IEEE ICASSP 2020: Depth Map Fingerprinting and Splicing Detection

Many of today’s pictures are taken by smartphones, which offer new opportunities for forensic analysis. A growing number of commodity devices can capture 3-D information, e.g., via stereo imaging or structured light. Modern smartphones commonly save such 3-D information as depth maps alongside regular images.

In this work, we propose to use characteristic artifacts of depth reconstruction algorithms as a forensic trace. The proposed method infers the source algorithm of stereo reconstructions with an accuracy of up to 97%. On real smartphone data, the method distinguishes patches from different sources with an AUC of up to 0.88, and we show that the method can also be used for splicing localization in depth maps.

Paper: Depth Map Fingerprinting and Splicing Localization

IH&MMSec 2019: Image Forensics from Chroma Subsampling of High-Quality JPEG Images

This work presents a novel artifact that can be used to fingerprint the JPEG compression library. The artifact arises from chroma subsampling in one of the most popular JPEG implementations. Due to integer rounding, every second column of the compressed chroma channel appears on average slightly brighter than its neighboring columns, which is why we call the artifact a chroma wrinkle. We theoretically derive the chroma wrinkle footprint in the DCT domain and use this footprint to detect chroma wrinkles.
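As a rough spatial-domain illustration, the sketch below measures the mean difference between even- and odd-indexed columns of a decoded chroma plane. This is a simplification, assuming the chroma plane is available as a 2-D array; it is not the DCT-domain footprint derived in the paper, and the function name is hypothetical.

```python
import numpy as np


def column_parity_offset(chroma):
    """
    Mean value of the even-indexed columns minus the mean of the odd-indexed
    columns of a decoded chroma plane (2-D array). A consistent offset hints at
    a periodic column pattern such as the chroma wrinkle described above.
    """
    plane = np.asarray(chroma, dtype=np.float64)
    width = plane.shape[1] - plane.shape[1] % 2   # use an even number of columns
    even = plane[:, 0:width:2]
    odd = plane[:, 1:width:2]
    return float(even.mean() - odd.mean())
```

In real images, scene content also contributes to this difference, which is one reason why a detector based on a derived frequency-domain footprint is more reliable than this raw statistic.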

DFRWS EU 2019: Forensic Source Identification using JPEG Image Headers: The Case of Smartphones

Tracing the sources of images, e.g., their model, make, or individual device, is a common problem in forensics. File headers provide the first clue. They can be parsed quickly and are thus of special interest when mining through large amounts of data. In this work, we analyze images that were taken with smartphones, which are much more flexible than traditional cameras: their software is regularly updated, and users choose the imaging app. We show that these software dynamics influence the header configuration of images. We then use machine learning to characterize and fingerprint header configurations associated with particular software settings.
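Header-based analysis starts by walking the JPEG marker segments that precede the entropy-coded data. The sketch below parses the segments up to the start-of-scan marker (0xFFDA) and hashes the marker order together with the quantization (DQT, 0xFFDB) and Huffman (DHT, 0xFFC4) tables. The function names are hypothetical, and a plain hash is only an exact-match fingerprint, whereas the work above applies machine learning to header configurations.

```python
import hashlib
import struct


def jpeg_header_segments(data: bytes):
    """Yield (marker, payload) for every marker segment up to and including SOS."""
    if data[:2] != b"\xff\xd8":                       # SOI marker
        raise ValueError("not a JPEG file (missing SOI marker)")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:                            # fill byte before a marker
            pos += 1
            continue
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])  # counts the 2 length bytes
        yield marker, data[pos + 4:pos + 2 + length]
        if marker == 0xDA:                            # SOS: entropy-coded data follows
            return
        pos += 2 + length


def header_fingerprint(data: bytes) -> str:
    """Hash of the marker sequence plus quantization (DQT) and Huffman (DHT) tables."""
    digest = hashlib.sha256()
    for marker, payload in jpeg_header_segments(data):
        digest.update(bytes([marker]))
        if marker in (0xDB, 0xC4):                    # encoder-specific table contents
            digest.update(payload)
    return digest.hexdigest()
```

Because only the header is read, such a pass is cheap even on large collections; grouping files by identical fingerprints is a crude first step before any learned comparison.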

Paper: Forensic Source Identification using JPEG Image Headers – The Case of Smartphones

IVFWS @ IEEE WACV 2019: Exploiting Visual Artifacts to Expose Deepfakes and Face Manipulations

High-quality face editing in videos is a growing concern and spreads distrust in video content. However, upon closer examination, many face editing algorithms exhibit artifacts that resemble classical computer vision issues stemming from face tracking and editing. This raises the question of how difficult it is to expose artificial faces from current generators. To this end, we review current facial editing methods and several characteristic artifacts of their processing pipelines. We also show that relatively simple visual artifacts can already be quite effective in exposing such manipulations, including Deepfakes and Face2Face. Since the methods are based on visual features, they are easy to explain to non-technical experts as well. The methods are easy to implement and can be rapidly adjusted to new manipulation types with little available data. Despite their simplicity, the methods achieve AUC values of up to 0.866.

paper

IEEE ICASSP 2019: Towards Learned Color Representations for Image Splicing Detection

Since the rise of social media, it has been an ongoing challenge to devise forensic approaches that are highly robust to common processing operations such as JPEG recompression and downsampling. In this work, we take a first step towards a novel type of cue for image splicing. It is based on the color formation of an image: we assume that the color formation is a joint fingerprint of the camera hardware, the software settings, and the depicted scene. As such, it can be used to locate spliced patches that originally stem from other images. To this end, we train a two-stage classifier on the full set of colors from a Macbeth color chart and compare two patches for their color consistency. Our results on a challenging dataset of downsampled splices created from identical scenes indicate that the color distribution can be a useful forensic tool that is highly resistant to JPEG compression.

Towards Learned Color Representations for Image Splicing Detection

GI Sicherheit 2018: Towards Forensic Exploitation of 3-D Lighting Environments in Practice

A well-known physics-based approach for image forensics is to validate the distribution of incident light on objects of interest. Inconsistent lighting environments are considered an indication of image splicing. However, one drawback of this approach is that it is quite challenging to use in practice. In this work, we propose several practical improvements to this approach. First, we propose a new way of comparing lighting environments. Second, we present a factorization of the overall error into its individual contributions, which shows that the biggest error source is incorrect geometric fits. Third, we propose a confidence score that is trained from the results of an actual implementation. The confidence score allows defining an implementation- and problem-specific threshold for the consistency of two lighting environments.

paper

IEEE ICIP 2017: Residual-Based Forensic Comparison of Video Sequences

Recording videos and sharing them with the world on platforms like YouTube has become easier than ever before. With this swift development, the demand for verifying the authenticity of multimedia content has grown as well, for example to combat “Fake News”. This work proposes two applications for assessing videos. The first application helps to detect spliced content, for example if a person from a green-screen recording was copied into a different video. The second application examines whether the investigated video frames belong to the same source, which makes it possible to determine whether frame sequences stem from different videos.
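A minimal sketch of residual-based comparison, assuming grayscale frames as NumPy arrays: a 3x3 mean filter stands in for a proper denoiser, the residuals are averaged over a sequence, and two sequences are compared by normalized cross-correlation. The filter choice and all function names are assumptions for illustration, not the method of the paper.

```python
import numpy as np


def box_blur3(frame):
    """3x3 mean filter with edge replication, used here as a crude denoiser."""
    padded = np.pad(frame, 1, mode="edge")
    h, w = frame.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w]
    return out / 9.0


def mean_residual(frames):
    """Average noise residual (frame minus its denoised version) over a sequence."""
    stack = np.asarray(frames, dtype=np.float64)      # shape (T, H, W)
    return np.mean([f - box_blur3(f) for f in stack], axis=0)


def ncc(a, b):
    """Normalized cross-correlation between two residuals of equal shape."""
    a = a - a.mean()
    b = b - b.mean()
    return float(np.sum(a * b) / (np.linalg.norm(a) * np.linalg.norm(b)))
```

Averaging over frames suppresses the random per-frame noise, so a fixed pattern shared by two sequences dominates their residuals and yields a high correlation, while unrelated sources correlate close to zero.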

Paper: Residual-Based Forensic Comparison of Video Sequences

TyrWS 2017: Illumination Analysis in Physics-based Image Forensics: A Joint Discussion of Illumination Direction and Color

Illumination direction and color are two physics-based forensic cues that are based on the same underlying model. In this work, we discuss these methods in light of their joint physical model, with a particular focus on their limitations and a qualitative study of their failure cases. Our goal is to provide directions for future research to further reduce the list of constraints that these methods require in order to work. We hope that this eventually broadens the applicability of physics-based methods and spreads their main advantage, namely their stringent models of deviations from the expected image formation.

paper

MTAP 2016: Handling Multiple Materials for Exposure of Digital Forgeries using 2-D Lighting Environments

The distribution of incident light is an important physics-based cue for exposing image manipulations. If an image has been composed from multiple sources, it is likely that the illumination environments of the spliced objects differ. Johnson and Farid introduced a proof-of-principle algorithm for a forensic comparison of lighting environments. However, this baseline approach suffers from relatively strict assumptions that limit its practical applicability. In this work, we address one of the biggest limitations, namely the need to compute a lighting environment from patches of homogeneous material. To compute a lighting environment from multiple-color surfaces, we propose a method that we call “intrinsic contour estimation” (ICE). ICE is able to integrate reflectances from multiple materials into one lighting environment, as long as surfaces of different materials share at least two similar normal vectors. We validate the proposed method in a controlled ground-truth experiment on two datasets, with light from three different directions. These experiments show that using ICE can improve the median estimation error by almost 50%, and the mean error by almost 30%.
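For reference, a simplified form of the Johnson and Farid baseline models the intensity along an occluding contour, where surface normals lie in the image plane, as I = L_x n_x + L_y n_y + A, with a constant reflectance folded into the light vector. The sketch below solves this single-material model by least squares and compares two estimates by their angle. It does not implement ICE, and it assumes that all contour points face the light, since the clamping of negative dot products is ignored. Function names are hypothetical.

```python
import numpy as np


def estimate_light_2d(normals, intensities):
    """
    Least-squares fit of I = Lx*nx + Ly*ny + A along an occluding contour.
    normals: (n, 2) unit surface normals in the image plane; intensities: (n,).
    Returns the 2-D light vector L and the ambient term A.
    """
    n = np.asarray(normals, dtype=np.float64)
    design = np.column_stack([n, np.ones(len(n))])    # columns: nx, ny, 1
    v, *_ = np.linalg.lstsq(design, np.asarray(intensities, dtype=np.float64), rcond=None)
    return v[:2], v[2]


def angular_difference_deg(l1, l2):
    """Angle between two estimated light directions, in degrees."""
    cos = np.dot(l1, l2) / (np.linalg.norm(l1) * np.linalg.norm(l2))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))
```

The single constant reflectance is exactly the homogeneous-material assumption that the abstract identifies as a key limitation: on a multi-colored surface, intensity changes caused by reflectance would be misread as changes in lighting.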

paper

IEEE TIFS 2013: Exposing Digital Image Forgeries by Illumination Color Classification

In this paper, we investigate image splicing. We propose a forgery detection method that exploits subtle inconsistencies in the color of the illumination of images. Our approach is machine-learning-based and requires minimal user interaction. The technique is applicable to images containing two or more people and requires no expert interaction for the tampering decision. To achieve this, we incorporate information from physics-based and statistics-based illuminant estimators on image regions of similar material. From these illuminant estimates, we extract texture- and edge-based features, which are then provided to a machine-learning approach for automatic decision-making.

paper

IEEE TIFS 2012: An Evaluation of Popular Copy-Move Forgery Detection Approaches

A copy-move forgery is created by copying and pasting content within the same image, and potentially postprocessing it. In recent years, the detection of copy-move forgeries has become one of the most actively researched topics in blind image forensics. A considerable number of different algorithms have been proposed, focusing on different types of postprocessed copies. In this paper, we aim to answer which copy-move forgery detection algorithms and processing steps (e.g., matching, filtering, outlier detection, affine transformation estimation) perform best in various postprocessing scenarios. The focus of our analysis is to evaluate the performance of previously proposed feature sets. We achieve this by casting existing algorithms in a common pipeline. We examine the 15 most prominent feature sets and analyze the detection performance on a per-image basis and on a per-pixel basis. We created a challenging real-world copy-move dataset and a software framework for systematic image manipulation. Experiments show that the keypoint-based features SIFT and SURF, as well as the block-based DCT, DWT, KPCA, PCA, and ZERNIKE features, perform very well. These feature sets exhibit the best robustness against various noise sources and downsampling, while reliably identifying the copied regions.
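Per-pixel evaluation compares a binary detection mask against a ground-truth mask of the copied and pasted regions. A minimal sketch of precision, recall, and F1 at the pixel level, assuming both masks are arrays of the same shape; the function name is hypothetical and the exact evaluation protocol of the paper may differ.

```python
import numpy as np


def pixel_scores(predicted_mask, ground_truth_mask):
    """Per-pixel precision, recall and F1 score for binary forgery masks."""
    pred = np.asarray(predicted_mask, dtype=bool)
    gt = np.asarray(ground_truth_mask, dtype=bool)
    tp = np.count_nonzero(pred & gt)       # forged pixels correctly flagged
    fp = np.count_nonzero(pred & ~gt)      # authentic pixels wrongly flagged
    fn = np.count_nonzero(~pred & gt)      # forged pixels missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

A per-image evaluation instead makes one decision per image (forged or not) and computes the same scores over a set of images.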

paper

DAGM and OAGM Pattern Recognition Symposium 2012: Automated Image Forgery Detection through Classification of JPEG Ghosts

We present a method for automating the detection of so-called JPEG ghosts. JPEG ghosts can be used for discriminating single and double JPEG compression, which is a common cue for image manipulation detection. The JPEG ghost scheme is particularly well suited for non-technical experts, but the manual search for such ghosts can be both tedious and error-prone. In this paper, we propose a method that automatically and efficiently discriminates single- and double-compressed regions based on the JPEG ghost principle. Experiments show that the detection results are highly competitive with state-of-the-art methods for both aligned and shifted JPEG grids in double JPEG compression.
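The principle behind JPEG ghosts can be shown with scalar quantization alone: coefficients that were quantized with step q1 incur no additional error when they are re-quantized with the same step. The sketch below, assuming uniformly distributed DCT coefficients and a hypothetical function name, computes this re-quantization error for a range of steps q2. It illustrates the ghost principle only and is not the automated classifier proposed in the paper.

```python
import numpy as np


def requantization_error(coeffs, q1, q2_values):
    """
    Quantize coefficients with step q1 (first compression), then return the mean
    squared error of re-quantizing them with each step q2 in q2_values.
    The error vanishes at q2 == q1 (and at divisors of q1): the 'ghost'.
    """
    c1 = q1 * np.round(np.asarray(coeffs, dtype=np.float64) / q1)
    return np.array([np.mean((c1 - q2 * np.round(c1 / q2)) ** 2) for q2 in q2_values])
```

In an image, the same effect appears as a local dip in the difference between a region and its recompressed version when the test quality matches the quality of a previous compression; finding and classifying these dips is the step that the paper automates.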

paper

IEEE WIFS 2010: On Rotation Invariance in Copy-Move Forgery Detection

The goal of copy-move forgery detection is to find duplicated regions within the same image. Copy-move detection algorithms operate roughly as follows: extract blockwise feature vectors, find similar feature vectors, and select feature pairs that share highly similar shift vectors. This selection plays an important role in the suppression of false matches. However, when the copied region is additionally rotated or scaled, shift vectors are no longer the most appropriate selection technique. In this paper, we present a rotation-invariant selection method, which we call Same Affine Transformation Selection (SATS). It shares the benefits of shift vectors at only slightly increased computational cost. As a byproduct, the proposed method explicitly recovers the parameters of the affine transformation applied to the copied region.
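The shift-vector pipeline described above can be sketched as follows, assuming a grayscale image and using raw pixel blocks as features with exact matching after lexicographic sorting. This is the translation-only baseline, not SATS: a rotated or scaled copy would not produce a dominant shift vector here. The function name and parameters are hypothetical.

```python
import numpy as np
from collections import Counter


def copy_move_shift_vectors(image, block=8, min_shift=16, min_count=5):
    """
    Block matching with lexicographic sorting of raw pixel blocks (a stand-in
    for real features such as DCT or Zernike moments). Returns the shift
    vectors (dy, dx) supported by at least min_count matching block pairs.
    """
    img = np.asarray(image, dtype=np.float64)
    h, w = img.shape
    feats, coords = [], []
    for y in range(h - block + 1):                      # 1) blockwise feature vectors
        for x in range(w - block + 1):
            feats.append(img[y:y + block, x:x + block].ravel())
            coords.append((y, x))
    feats = np.array(feats)
    order = np.lexsort(feats.T[::-1])                   # 2) sort rows lexicographically
    counts = Counter()
    for i, j in zip(order[:-1], order[1:]):             # compare sorted neighbours only
        if np.array_equal(feats[i], feats[j]):
            (y1, x1), (y2, x2) = coords[i], coords[j]
            dy, dx = y2 - y1, x2 - x1
            if (dy, dx) < (0, 0):                       # normalise the sign of the shift
                dy, dx = -dy, -dx
            if np.hypot(dy, dx) >= min_shift:           # ignore near-duplicate neighbours
                counts[(dy, dx)] += 1
    return [s for s, c in counts.items() if c >= min_count]  # 3) shift-vector selection
```

Every block of a purely translated copy votes for the same shift vector, which is why counting votes suppresses isolated false matches. After a rotation, matching blocks are spread over many different shift vectors, so none of them may reach `min_count`.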

paper

Information Hiding 2010: Scene Illumination as an Indicator of Image Manipulation

We propose illumination color as a new indicator for the assessment of image authenticity. Many images exhibit a combination of multiple illuminants (flash photography, mixture of indoor and outdoor lighting, etc.). In the proposed method, the user selects illuminated areas for further investigation. The illuminant colors are locally estimated, effectively decomposing the scene into a map of differently illuminated regions. Inconsistencies in such a map suggest possible image tampering. Our method is physics-based, which implies that the outcome of the estimation can be further constrained if additional knowledge about the scene is available. Experiments show that these illumination maps provide a useful and very general forensic tool for the analysis of color images.
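A sketch of a local illuminant map, assuming an RGB image as a NumPy array split into fixed-size blocks. It uses the gray-world estimator (the average color of a block is taken as the illuminant color), which is a simpler statistical stand-in for the physics-based estimator of the paper; the estimates of different regions can then be compared by their angular error. Function names and the block size are hypothetical.

```python
import numpy as np


def local_illuminant_map(image, block=32):
    """
    Gray-world illuminant estimate per block of an RGB image (H, W, 3).
    Returns an array (H // block, W // block, 3) of unit-length RGB vectors.
    """
    img = np.asarray(image, dtype=np.float64)
    rows, cols = img.shape[0] // block, img.shape[1] // block
    estimates = np.zeros((rows, cols, 3))
    for r in range(rows):
        for c in range(cols):
            patch = img[r * block:(r + 1) * block, c * block:(c + 1) * block]
            mean_rgb = patch.reshape(-1, 3).mean(axis=0)   # gray-world assumption
            estimates[r, c] = mean_rgb / (np.linalg.norm(mean_rgb) + 1e-12)
    return estimates


def angular_error_deg(e1, e2):
    """Angle between two illuminant color estimates, in degrees."""
    cos = np.dot(e1, e2) / (np.linalg.norm(e1) * np.linalg.norm(e2))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))
```

Gray world fails on blocks dominated by a single surface color, which is one reason why the user-guided selection of regions and a physics-based estimator, as described above, matter in practice.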

paper

GI Sicherheit 2010: A Study on Features for the Detection of Copy-Move Forgeries

One of the most popular image forensics methods is the detection of copy-move forgeries. In the past years, more than 15 different algorithms have been proposed for copy-move forgery detection. So far, the efficacy of these approaches has barely been examined. In this paper, we: a) present a common pipeline for copy-move forgery detection, b) perform a comparative study on 10 proposed copy-move features and c) introduce a new benchmark database for copy-move forgery detection. Experiments show that the recently proposed Fourier-Mellin features perform outstandingly if no geometric transformations are applied to the copied region. Furthermore, our experiments strongly support the use of kd-trees for the matching of similar blocks instead of lexicographic sorting.

paper